Idempotency

The idempotency utility provides a simple solution to convert your Lambda functions into idempotent operations which are safe to retry.

Key features

- Prevent Lambda handler from executing more than once on the same event payload during a time window

- Ensure Lambda handler returns the same result when called with the same payload

- Select a subset of the event as the idempotency key using JMESPath expressions

- Set a time window in which records with the same payload should be considered duplicates

- Expire in-progress executions if the Lambda function times out halfway through

- Support Amazon DynamoDB and Redis as persistence layers

Terminology

The property of idempotency means that an operation does not cause additional side effects if it is called more than once with the same input parameters.

Idempotent operations will return the same result when they are called multiple times with the same parameters. This makes idempotent operations safe to retry.

Idempotency key is a hash representation of either the entire event or a specific configured subset of the event, and invocation results are JSON serialized and stored in your persistence storage layer.
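To make the idea of a hash key concrete, here is a minimal standard-library sketch. It assumes the payload is JSON serializable and hashes a canonical JSON encoding (sorted keys) with MD5, the default hash_function listed under Advanced. The idempotency_key helper and the `function_name#digest` layout are illustrative assumptions, not Powertools' internal code.

```python
import hashlib
import json
from typing import Any


def idempotency_key(event: Any, function_name: str, hash_function: str = "md5") -> str:
    """Illustrative key: function_name + '#' + hash of the canonical JSON payload."""
    # Sorted keys and compact separators give one canonical encoding per payload.
    canonical = json.dumps(event, sort_keys=True, separators=(",", ":"))
    digest = hashlib.new(hash_function, canonical.encode("utf-8")).hexdigest()
    # The prefix keeps records from different functions apart in a shared table.
    return f"{function_name}#{digest}"
```

Sorting keys means two payloads that differ only in key order map to the same record, while any change in a value produces a different key.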

Idempotency record is the data representation of an idempotent request saved in your preferred storage layer. We use it to coordinate whether a request is idempotent, whether it's still valid or expired based on timestamps, etc.

classDiagram
    direction LR
    class IdempotencyRecord {
        idempotency_key str
        status Status
        expiry_timestamp int
        in_progress_expiry_timestamp int
        response_data Json~str~
        payload_hash str
    }
    class Status {
        <<Enumeration>>
        INPROGRESS
        COMPLETE
        EXPIRED internal_only
    }
    IdempotencyRecord -- Status

Idempotency record representation
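The same record can be modelled as a Python dataclass. This is an illustrative sketch: the to_item helper maps fields onto the default DynamoDB attribute names from the DynamoDBPersistenceLayer parameter table further down, and the exact status strings Powertools writes may differ from the enum names shown in the diagram.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Dict, Optional


class Status(Enum):
    INPROGRESS = "INPROGRESS"
    COMPLETE = "COMPLETE"
    EXPIRED = "EXPIRED"  # internal only, per the diagram; this sketch never writes it


@dataclass
class IdempotencyRecord:
    idempotency_key: str
    status: Status
    expiry_timestamp: int  # Unix timestamp
    in_progress_expiry_timestamp: Optional[int] = None
    response_data: Optional[str] = None  # JSON-serialized result of the function
    payload_hash: Optional[str] = None  # hash of the validated part of the payload

    def to_item(self) -> Dict[str, Any]:
        """Map fields onto the default DynamoDB attribute names."""
        item: Dict[str, Any] = {
            "id": self.idempotency_key,
            "expiration": self.expiry_timestamp,
            "status": self.status.value,
        }
        optional = {
            "in_progress_expiration": self.in_progress_expiry_timestamp,
            "data": self.response_data,
            "validation": self.payload_hash,
        }
        # Only write optional attributes that actually have a value.
        item.update({name: value for name, value in optional.items() if value is not None})
        return item
```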

Getting started

Note

This section uses DynamoDB as the default idempotent persistence storage layer. If you are interested in using Redis as the persistence storage layer, check out the Redis as persistent storage layer provider section.

IAM Permissions

Your Lambda function IAM Role must have dynamodb:GetItem, dynamodb:PutItem, dynamodb:UpdateItem and dynamodb:DeleteItem IAM permissions before using this feature.

Note

If you're using our example AWS Serverless Application Model (SAM), AWS Cloud Development Kit (CDK), or Terraform templates, the required permissions are already added.

Required resources

Before getting started, you need to create a persistent storage layer where the idempotency utility can store its state; your Lambda functions will need read and write access to it.

We currently support Amazon DynamoDB and Redis as a storage layer. Below, we describe the expected DynamoDB table configuration. If you prefer to use Redis, refer to the RedisPersistenceLayer section.

Default table configuration

If you're not changing the default configuration for the DynamoDB persistence layer, this is the expected default configuration:

| Configuration | Value | Notes |
|---|---|---|
| Partition key | id | |
| TTL attribute name | expiration | This can only be configured after your table is created if you're using AWS Console |

Tip: You can share a single state table for all functions

You can reuse the same DynamoDB table to store idempotency state for all functions. In addition to the idempotency key, we include the module name and the qualified name of the decorated class or function in the hash key, so records from different functions don't collide.
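If you create the table with a script rather than infrastructure as code, the default configuration above translates into the boto3 request parameters sketched below. The table name IdempotencyTable, on-demand billing, and the helper function names are assumptions; pass a boto3 DynamoDB client, for example boto3.client("dynamodb"), to create_idempotency_table.

```python
from typing import Any, Dict


def idempotency_table_definition(table_name: str) -> Dict[str, Any]:
    """CreateTable keyword arguments matching the default layout (partition key 'id')."""
    return {
        "TableName": table_name,
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
        "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
    }


def ttl_specification(table_name: str) -> Dict[str, Any]:
    """UpdateTimeToLive keyword arguments for the default 'expiration' attribute."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {"Enabled": True, "AttributeName": "expiration"},
    }


def create_idempotency_table(dynamodb_client: Any, table_name: str = "IdempotencyTable") -> None:
    """Create the table, wait until it exists, then enable TTL.

    The client is passed in (e.g. boto3.client("dynamodb")) so this module
    has no hard dependency on boto3.
    """
    dynamodb_client.create_table(**idempotency_table_definition(table_name))
    dynamodb_client.get_waiter("table_exists").wait(TableName=table_name)
    dynamodb_client.update_time_to_live(**ttl_specification(table_name))
```

Enabling TTL is a separate UpdateTimeToLive call, which is why the helper waits for the table to exist first.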


Warning: Large responses with DynamoDB persistence layer

When using this utility with DynamoDB, your function's responses must be smaller than 400KB.

Larger items cannot be written to DynamoDB and will cause exceptions. If your response exceeds 400KB, consider using Redis as your persistence layer.

Info: DynamoDB

During the first invocation with a payload, the Lambda function executes both a PutItem and an UpdateItem operation to store the data in DynamoDB. If the result returned by your Lambda is less than 1KB, you can expect 2 WCUs per Lambda invocation.

On subsequent invocations with the same payload, you can expect just 1 PutItem request to DynamoDB.

Note: While we try to minimize requests to DynamoDB to 1 per invocation, if your boto3 version is lower than 1.26.194, you may experience 2 requests in every invocation. Check your boto3 version and review the DynamoDB pricing documentation to estimate the cost.
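Both the 400KB limit and the WCU figures above depend on the size of the stored item. The rough estimator below serializes a response to JSON, rejects anything over the DynamoDB item limit, and applies standard write metering (one WCU per started 1KB of item size) to a first invocation's PutItem and UpdateItem. The ~200 byte record overhead is an assumption, since the real size depends on attribute names, key length and timestamps, so treat the output as an estimate.

```python
import json
import math

DYNAMODB_ITEM_LIMIT_BYTES = 400 * 1024  # maximum DynamoDB item size
WCU_CHUNK_BYTES = 1024  # one standard write unit covers up to 1 KB


def wcus_for_write(item_size_bytes: int) -> int:
    """Standard write capacity units consumed by one write of an item this size."""
    return max(1, math.ceil(item_size_bytes / WCU_CHUNK_BYTES))


def estimate_first_invocation_wcus(response: object, record_overhead_bytes: int = 200) -> int:
    """Rough WCUs for PutItem (INPROGRESS record) plus UpdateItem (stores the result).

    record_overhead_bytes is an assumed size for the key, status and timestamp attributes.
    """
    response_bytes = len(json.dumps(response).encode("utf-8"))
    if response_bytes + record_overhead_bytes > DYNAMODB_ITEM_LIMIT_BYTES:
        raise ValueError("response too large to store in a single DynamoDB item")
    put_item = wcus_for_write(record_overhead_bytes)
    # UpdateItem is metered on the (larger) item size after the result is added.
    update_item = wcus_for_write(record_overhead_bytes + response_bytes)
    return put_item + update_item
```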

Idempotent decorator

You can quickly start by initializing the DynamoDBPersistenceLayer class and using it with the idempotent decorator on your Lambda handler.

Note

In this example, the entire Lambda handler is treated as a single idempotent operation. If your Lambda handler can cause multiple side effects, or you're only interested in making specific logic idempotent, use idempotent_function instead.

See Choosing a payload subset for idempotency for more elaborate use cases.


After processing this request successfully, a second request containing the exact same payload will return the same response, ensuring our customer isn't charged twice.
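The sketch below models the decorator's flow with an in-memory dictionary instead of DynamoDB, so you can follow the states without AWS: a first call locks the payload as INPROGRESS, a successful result is saved as COMPLETE and replayed on retries, and an unhandled exception deletes the record. InMemoryStore, AlreadyInProgressError, toy_idempotent and charge are hypothetical names; in a real function you'd use @idempotent with a DynamoDBPersistenceLayer, which takes the lock with an atomic conditional write rather than the non-atomic check shown here.

```python
import functools
import hashlib
import json
from typing import Any, Callable, Dict, List


class InMemoryStore:
    """Hypothetical stand-in for a persistence layer such as DynamoDB."""

    def __init__(self) -> None:
        self.records: Dict[str, Dict[str, Any]] = {}


class AlreadyInProgressError(Exception):
    """Stand-in for IdempotencyAlreadyInProgressError."""


def toy_idempotent(store: InMemoryStore) -> Callable:
    """Conceptual sketch of the idempotent handler flow; not the Powertools decorator."""

    def decorator(handler: Callable[[dict, Any], Any]) -> Callable[[dict, Any], Any]:
        @functools.wraps(handler)
        def wrapper(event: dict, context: Any) -> Any:
            key = hashlib.md5(json.dumps(event, sort_keys=True).encode()).hexdigest()
            record = store.records.get(key)
            if record is not None:
                if record["status"] == "COMPLETE":
                    return json.loads(record["data"])  # retry: replay the saved response
                raise AlreadyInProgressError(key)
            store.records[key] = {"status": "INPROGRESS"}  # lock this payload (not atomic here)
            try:
                result = handler(event, context)
            except Exception:
                del store.records[key]  # unhandled error: let a retry run again
                raise
            store.records[key] = {"status": "COMPLETE", "data": json.dumps(result)}
            return result

        return wrapper

    return decorator


# Usage: the side effect runs once even though the handler is invoked twice.
store = InMemoryStore()
charges: List[int] = []


@toy_idempotent(store)
def charge(event: dict, context: Any) -> dict:
    charges.append(event["user_id"])
    return {"user_id": event["user_id"], "message": "success"}


first = charge({"user_id": 1, "product_id": "p-1"}, None)
second = charge({"user_id": 1, "product_id": "p-1"}, None)  # retried request
```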

New to idempotency concept? Please review our Terminology section if you haven't yet.

Idempotent_function decorator

Similar to the idempotent decorator, you can use the idempotent_function decorator for any synchronous Python function.

When using idempotent_function, you must tell us which keyword parameter in your function signature has the data we should use via data_keyword_argument.

We support JSON serializable data, Python Dataclasses, Parser/Pydantic Models, and our Event Source Data Classes.

Limitation

Make sure to call your decorated function using keyword arguments.
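To illustrate why keyword arguments are required, this hypothetical decorator looks the payload up by name in kwargs, as data_keyword_argument implies; a positional call leaves nothing to hash, so the sketch raises TypeError. It caches results in a plain dict and is not the Powertools implementation; toy_idempotent_function, process_order and the order fields are made up for the example.

```python
import functools
import hashlib
import json
from typing import Any, Callable, Dict, List


def toy_idempotent_function(data_keyword_argument: str, cache: Dict[str, Any]) -> Callable:
    """Sketch: deduplicate calls on one keyword argument; not the Powertools decorator."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            if data_keyword_argument not in kwargs:
                # A positional argument can't be matched to the configured name here.
                raise TypeError(f"call {func.__name__} with {data_keyword_argument}=... as a keyword argument")
            payload = kwargs[data_keyword_argument]
            key = hashlib.md5(json.dumps(payload, sort_keys=True).encode()).hexdigest()
            if key not in cache:
                cache[key] = func(*args, **kwargs)
            return cache[key]

        return wrapper

    return decorator


results: Dict[str, Any] = {}
processed: List[int] = []


@toy_idempotent_function(data_keyword_argument="order", cache=results)
def process_order(order: dict) -> dict:
    processed.append(order["order_id"])  # the side effect we want to run once
    return {"order_id": order["order_id"], "status": "processed"}
```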


Output serialization

By default, idempotent_function serializes, stores, and returns your annotated function's result as a JSON object. You can change this behavior using the output_serializer parameter.

The output serializer supports any JSON serializable data, Python Dataclasses and Pydantic Models.

When using the output_serializer parameter, the data will continue to be stored in DynamoDB as a JSON object.

You can use PydanticSerializer to automatically convert what's retrieved from the persistent storage back into the annotated return type.


- We'll use OrderOutput to instantiate a new object using the data retrieved from persistent storage as input. This ensures the return of the function is not impacted when @idempotent_function is used.

Alternatively, you can provide an explicit model as an input to PydanticSerializer.


You can use DataclassSerializer to automatically convert what's retrieved from the persistent storage back into the annotated return type.


- We'll use OrderOutput to instantiate a new object using the data retrieved from persistent storage as input. This ensures the return of the function is not impacted when @idempotent_function is used.

Alternatively, you can provide an explicit model as an input to DataclassSerializer.
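Conceptually, converting based on the annotated return type means reading the function's type hints and rebuilding that class from the stored dictionary. A standard-library sketch with a dataclass follows; to_storage and from_storage are hypothetical helpers and OrderOutput's fields are assumptions, not the serializers' real internals.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Callable, get_type_hints


@dataclass
class OrderOutput:
    order_id: int
    status: str


def to_storage(result: Any) -> str:
    """Serialize a dataclass result to the JSON string that would be persisted."""
    return json.dumps(asdict(result))


def from_storage(stored: str, func: Callable) -> Any:
    """Rebuild the function's result using the class named in its return annotation."""
    return_type = get_type_hints(func)["return"]
    return return_type(**json.loads(stored))


def process_order(order_id: int) -> OrderOutput:
    return OrderOutput(order_id=order_id, status="processed")
```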


You can use CustomDictSerializer to have full control over the serialization process for any type. It expects two functions:

- to_dict. Function to convert any type to a JSON serializable dictionary before it is saved into the persistent storage.

- from_dict. Function to convert a dictionary retrieved from persistent storage back into its original form.


- The to_dict function does the following:

1. Receives the return from process_order

2. Converts it to a dictionary before it can be saved into the persistent storage.

- The from_dict function does the following:

1. Receives the dictionary saved into the persistent storage

2. Serializes it to OrderOutput before @idempotent_function returns back to the caller.

- The serializer receives both functions so it knows which one to call when converting to and from a dictionary.
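A minimal sketch of the to_dict/from_dict contract, for a plain class whose datetime field isn't JSON serializable by default. ToyDictSerializer is a hypothetical stand-in that pairs the two functions the way CustomDictSerializer is described above; it is not the library class, and OrderOutput's fields are assumed.

```python
import json
from datetime import datetime, timezone
from typing import Any, Callable, Dict


class OrderOutput:
    """A plain class (not a dataclass or Pydantic model) with a non-JSON field."""

    def __init__(self, order_id: int, created_at: datetime) -> None:
        self.order_id = order_id
        self.created_at = created_at


def order_to_dict(value: OrderOutput) -> Dict[str, Any]:
    # Receives the return value and converts it to a JSON serializable dict.
    return {"order_id": value.order_id, "created_at": value.created_at.isoformat()}


def order_from_dict(value: Dict[str, Any]) -> OrderOutput:
    # Receives the stored dict and rebuilds the original OrderOutput.
    return OrderOutput(order_id=value["order_id"], created_at=datetime.fromisoformat(value["created_at"]))


class ToyDictSerializer:
    """Hypothetical pairing of the two functions, in the spirit of CustomDictSerializer."""

    def __init__(
        self,
        to_dict: Callable[[Any], Dict[str, Any]],
        from_dict: Callable[[Dict[str, Any]], Any],
    ) -> None:
        self.to_dict = to_dict
        self.from_dict = from_dict

    def dumps(self, value: Any) -> str:
        return json.dumps(self.to_dict(value))

    def loads(self, stored: str) -> Any:
        return self.from_dict(json.loads(stored))
```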

Batch integration

You can easily integrate with the Batch utility via a context manager. This ensures that you process each record in an idempotent manner, and guards against idempotency issues caused by a Lambda timeout.

Choosing a unique batch record attribute

In this example, we choose messageId as our idempotency key since we know it'll be unique.

Depending on your use case, it might be more accurate to choose another field your producer intentionally set to define uniqueness.
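The per-record idea can be sketched without the Batch utility: key each record on messageId, skip keys whose result is already stored, and report failures so only those records are retried. process_batch_idempotently and its seen dictionary are hypothetical stand-ins for the Batch utility and a persistence layer; the itemIdentifier entries follow the SQS partial batch response format.

```python
from typing import Any, Callable, Dict, List


def process_batch_idempotently(
    records: List[Dict[str, Any]],
    handle: Callable[[Dict[str, Any]], Any],
    seen: Dict[str, Any],
    key_field: str = "messageId",
) -> List[Dict[str, str]]:
    """Process each record at most once per key; return SQS-style batch item failures.

    `seen` stands in for the persistence layer and maps keys to saved results.
    """
    failures = []
    for record in records:
        key = record[key_field]
        if key in seen:
            continue  # duplicate delivery: result already stored, skip the side effects
        try:
            seen[key] = handle(record)
        except Exception:
            # Nothing was stored, so a redelivery of this record is processed again.
            failures.append({"itemIdentifier": record["messageId"]})
    return failures
```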


Choosing a payload subset for idempotency

Tip: Dealing with always changing payloads

When dealing with a more elaborate payload, where parts of the payload always change, you should use the event_key_jmespath parameter.

Use IdempotencyConfig to instruct the idempotent decorator to only use a portion of your payload to verify whether a request is idempotent, and therefore it should not be retried.

Payment scenario

In this example, we have a Lambda handler that creates a payment for a user subscribing to a product. We want to ensure that we don't accidentally charge our customer by subscribing them more than once.

Imagine the function executes successfully, but the client never receives the response due to a connection issue. It is safe to retry in this instance, as the idempotent decorator will return a previously saved response.

What we want here is to instruct Idempotency to use user_id and product_id fields from our incoming payload as our idempotency key.

If we were to treat the entire request as our idempotency key, a simple HTTP header change would cause our customer to be charged twice.

Deserializing JSON strings in payloads for increased accuracy.

The payload extracted by the event_key_jmespath is treated as a string by default.

This means there could be differences in whitespace even when the JSON payload itself is identical.

To alter this behaviour, we can use the JMESPath built-in function powertools_json() to treat the payload as a JSON object (dict) rather than a string.
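To see why the subset and powertools_json() matter, this standard-library sketch hashes an API Gateway-style event two ways: the raw body string, and only user_id and product_id from the parsed body. It approximates what an expression such as powertools_json(body).["user_id", "product_id"] selects but does not use JMESPath; the event shape and helper names are assumptions.

```python
import hashlib
import json
from typing import Any, Dict


def _digest(data: Any) -> str:
    return hashlib.md5(json.dumps(data, sort_keys=True).encode()).hexdigest()


def key_from_raw_body(event: Dict[str, Any]) -> str:
    """Hash the body as the raw string it arrived as, so whitespace matters."""
    return _digest(event["body"])


def key_from_parsed_subset(event: Dict[str, Any]) -> str:
    """Parse the JSON body first, then keep only user_id and product_id."""
    body = json.loads(event["body"])
    return _digest([body["user_id"], body["product_id"]])
```

Headers never reach either key here, and only the parsed variant is insensitive to whitespace or extra fields in the body.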


Lambda timeouts

Note

This is automatically done when you decorate your Lambda handler with the @idempotent decorator.

To protect against extended failed retries when a Lambda function times out, Powertools for AWS Lambda (Python) calculates and includes the remaining invocation time as part of the idempotency record.

Example

If a second invocation happens after this timestamp, and the record is marked as INPROGRESS, we will execute the invocation again as if it was in the EXPIRED state (e.g., as if expires_after_seconds had elapsed).

This means that if an invocation expired during execution, it will be quickly executed again on the next retry.
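A sketch of the calculation, assuming the in-progress deadline is stored as a millisecond timestamp derived from the Lambda context's get_remaining_time_in_millis(). The helper names are hypothetical; inside a handler you would pass context.get_remaining_time_in_millis() as remaining_time_ms.

```python
import time
from typing import Optional


def in_progress_expiry_ms(remaining_time_ms: int, now: Optional[float] = None) -> int:
    """Millisecond timestamp after which an INPROGRESS record is considered abandoned."""
    now = time.time() if now is None else now
    return int(now * 1000) + remaining_time_ms


def can_retry_in_progress(status: str, in_progress_expiry: Optional[int], now: Optional[float] = None) -> bool:
    """True if an INPROGRESS record may be re-executed as though it had expired."""
    if status != "INPROGRESS" or in_progress_expiry is None:
        return False
    now = time.time() if now is None else now
    return int(now * 1000) > in_progress_expiry
```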

Important

If you are only using the @idempotent_function decorator to guard isolated parts of your code, you must use register_lambda_context available in the idempotency config object to benefit from this protection.

To do so, register the Lambda context in your handler by calling config.register_lambda_context(context), where config is your IdempotencyConfig instance, before calling your decorated function.


Handling exceptions

If you are using the idempotent decorator on your Lambda handler, any unhandled exceptions that are raised during the code execution will cause the record in the persistence layer to be deleted.

This means that new invocations will execute your code again despite having the same payload. If you don't want the record to be deleted, you need to catch exceptions within the idempotent function and return a successful response.

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    Client->>Lambda: Invoke (event)
    Lambda->>Persistence Layer: Get or set (id=event.search(payload))
    activate Persistence Layer
    Note right of Persistence Layer: Locked during this time. Prevents multiple<br/>Lambda invocations with the same<br/>payload running concurrently.
    Lambda--xLambda: Call handler (event).<br/>Raises exception
    Lambda->>Persistence Layer: Delete record (id=event.search(payload))
    deactivate Persistence Layer
    Lambda-->>Client: Return error response

If you are using idempotent_function, any unhandled exceptions that are raised inside the decorated function will cause the record in the persistence layer to be deleted, and allow the function to be executed again if retried.

If an Exception is raised outside the scope of the decorated function and after your function has been called, the persistent record will not be affected. In this case, idempotency will be maintained for your decorated function.


Warning

We will raise IdempotencyPersistenceLayerError if any of the calls to the persistence layer fail unexpectedly.

As this happens outside the scope of your decorated function, you are not able to catch it if you're using the idempotent decorator on your Lambda handler.

Persistence layers

DynamoDBPersistenceLayer

This persistence layer is built-in, allowing you to use an existing DynamoDB table or create a new one dedicated to idempotency state (recommended).


When using DynamoDB as the persistence layer, you can customize the attribute names by passing the following parameters during the initialization of the persistence layer:

| Parameter | Required | Default | Description |
|---|---|---|---|
| table_name | Yes | | Table name to store state |
| key_attr | | id | Partition key of the table. Hashed representation of the payload (unless sort_key_attr is specified) |
| expiry_attr | | expiration | Unix timestamp of when record expires |
| in_progress_expiry_attr | | in_progress_expiration | Unix timestamp of when record expires while in progress (in case the invocation times out) |
| status_attr | | status | Stores status of the Lambda execution during and after invocation |
| data_attr | | data | Stores results of successfully executed Lambda handlers |
| validation_key_attr | | validation | Hashed representation of the parts of the event used for validation |
| sort_key_attr | | | Sort key of the table (if table is configured with a sort key). |
| static_pk_value | | idempotency#{LAMBDA_FUNCTION_NAME} | Static value to use as the partition key. Only used when sort_key_attr is set. |

RedisPersistenceLayer

This persistence layer is built-in, allowing you to use an existing Redis service. For optimal performance and compatibility, it is strongly recommended to use a Redis service version 7 or higher.


When using Redis as the persistence layer, you can customize the attribute names by providing the following parameters upon initialization of the persistence layer:

| Parameter | Required | Default | Description |
|---|---|---|---|
| in_progress_expiry_attr | | in_progress_expiration | Unix timestamp of when record expires while in progress (in case the invocation times out) |
| status_attr | | status | Stores status of the Lambda execution during and after invocation |
| data_attr | | data | Stores results of successfully executed Lambda handlers |
| validation_key_attr | | validation | Hashed representation of the parts of the event used for validation |

Idempotency request flow

The following sequence diagrams explain how the Idempotency feature behaves under different scenarios.

Successful request

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    alt initial request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
        Lambda-->>Lambda: Call your function
        Lambda->>Persistence Layer: Update record with result
        deactivate Persistence Layer
        Persistence Layer-->>Persistence Layer: Update record
        Note over Lambda,Persistence Layer: Set record status to COMPLETE. <br> New invocations with the same payload <br> now return the same result
        Lambda-->>Client: Response sent to client
    else retried request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Persistence Layer-->>Lambda: Already exists in persistence layer.
        deactivate Persistence Layer
        Note over Lambda,Persistence Layer: Record status is COMPLETE and not expired
        Lambda-->>Client: Same response sent to client
    end

Successful request with cache enabled

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    alt initial request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
        Lambda-->>Lambda: Call your function
        Lambda->>Persistence Layer: Update record with result
        deactivate Persistence Layer
        Persistence Layer-->>Persistence Layer: Update record
        Note over Lambda,Persistence Layer: Set record status to COMPLETE. <br> New invocations with the same payload <br> now return the same result
        Lambda-->>Lambda: Save record and result in memory
        Lambda-->>Client: Response sent to client
    else retried request
        Client->>Lambda: Invoke (event)
        Lambda-->>Lambda: Get idempotency_key=hash(payload)
        Note over Lambda,Persistence Layer: Record status is COMPLETE and not expired
        Lambda-->>Client: Same response sent to client
    end

Expired idempotency records

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    alt initial request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
        Lambda-->>Lambda: Call your function
        Lambda->>Persistence Layer: Update record with result
        deactivate Persistence Layer
        Persistence Layer-->>Persistence Layer: Update record
        Note over Lambda,Persistence Layer: Set record status to COMPLETE. <br> New invocations with the same payload <br> now return the same result
        Lambda-->>Client: Response sent to client
    else retried request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Persistence Layer-->>Lambda: Already exists in persistence layer.
        deactivate Persistence Layer
        Note over Lambda,Persistence Layer: Record status is COMPLETE but expired hours ago
        loop Repeat initial request process
            Note over Lambda,Persistence Layer: 1. Set record to INPROGRESS, <br> 2. Call your function, <br> 3. Set record to COMPLETE
        end
        Lambda-->>Client: Same response sent to client
    end

Concurrent identical in-flight requests

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    Client->>Lambda: Invoke (event)
    Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
    activate Persistence Layer
    Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
    par Second request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        Lambda--xLambda: IdempotencyAlreadyInProgressError
        Lambda->>Client: Error sent to client if unhandled
    end
    Lambda-->>Lambda: Call your function
    Lambda->>Persistence Layer: Update record with result
    deactivate Persistence Layer
    Persistence Layer-->>Persistence Layer: Update record
    Note over Lambda,Persistence Layer: Set record status to COMPLETE. <br> New invocations with the same payload <br> now return the same result
    Lambda-->>Client: Response sent to client

Lambda request timeout

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    alt initial request
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
        Lambda-->>Lambda: Call your function
        Note right of Lambda: Time out
        Lambda--xLambda: Time out error
        Lambda-->>Client: Return error response
        deactivate Persistence Layer
    else retry after Lambda timeout elapses
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Reset in_progress_expiry attribute
        Lambda-->>Lambda: Call your function
        Lambda->>Persistence Layer: Update record with result
        deactivate Persistence Layer
        Persistence Layer-->>Persistence Layer: Update record
        Lambda-->>Client: Response sent to client
    end

Optional idempotency key

sequenceDiagram
    participant Client
    participant Lambda
    participant Persistence Layer
    alt request with idempotency key
        Client->>Lambda: Invoke (event)
        Lambda->>Persistence Layer: Get or set idempotency_key=hash(payload)
        activate Persistence Layer
        Note over Lambda,Persistence Layer: Set record status to INPROGRESS. <br> Prevents concurrent invocations <br> with the same payload
        Lambda-->>Lambda: Call your function
        Lambda->>Persistence Layer: Update record with result
        deactivate Persistence Layer
        Persistence Layer-->>Persistence Layer: Update record
        Note over Lambda,Persistence Layer: Set record status to COMPLETE. <br> New invocations with the same payload <br> now return the same result
        Lambda-->>Client: Response sent to client
    else request(s) without idempotency key
        Client->>Lambda: Invoke (event)
        Note over Lambda: Idempotency key is missing
        Note over Persistence Layer: Skips any operation to fetch, update, and delete
        Lambda-->>Lambda: Call your function
        Lambda-->>Client: Response sent to client
    end

Race condition with Redis

graph TD;
    A(Existing orphan record in redis)-->A1;
    A1[Two Lambda invoke at same time]-->B1[Lambda handler1];
    B1-->B2[Fetch from Redis];
    B2-->B3[Handler1 got orphan record];
    B3-->B4[Handler1 acquired lock];
    B4-->B5[Handler1 overwrite orphan record]
    B5-->B6[Handler1 continue to execution];
    A1-->C1[Lambda handler2];
    C1-->C2[Fetch from Redis];
    C2-->C3[Handler2 got orphan record];
    C3-->C4[Handler2 failed to acquire lock];
    C4-->C5[Handler2 wait and fetch from Redis];
    C5-->C6[Handler2 return without executing];
    B6-->D(Lambda handler executed only once);
    C6-->D;

Redis as persistent storage layer provider

Redis resources

Before setting up Redis as the persistent storage layer provider, you must have an existing Redis service. We recommend using Redis-compatible services such as Amazon ElastiCache for Redis or Amazon MemoryDB for Redis as your persistent storage layer provider.

No existing Redis service?

If you don't have an existing Redis service, we recommend using DynamoDB as the persistent storage layer provider.


- Replace the Security Group ID and Subnet ID to match your VPC settings.

VPC Access

Your Lambda Function must have network access to the Redis endpoint before using it as the idempotency persistent storage layer. In most cases, you will need to configure VPC access for your Lambda Function.

Amazon ElastiCache/MemoryDB for Redis as persistent storage layer provider

If you plan to use Amazon ElastiCache for Redis as the idempotency persistent storage layer, you may find this AWS tutorial helpful. For those using Amazon MemoryDB for Redis, refer to this AWS tutorial specifically for the VPC setup guidance.

After completing the VPC setup, you can use the templates provided below to set up Lambda functions with access to VPC internal subnets.


- Replace the Security Group ID and Subnet ID to match your VPC settings.

Configuring Redis persistence layer

You can quickly get started by initializing the RedisCachePersistenceLayer class and applying the idempotent decorator to your Lambda handler. For a detailed example of using the RedisCachePersistenceLayer, refer to the Persistence layers section.

Info

We enforce security best practices by using SSL connections in the RedisCachePersistenceLayer; to disable it, set ssl=False


Custom advanced settings

For advanced configurations, such as setting up SSL certificates or customizing parameters like a custom timeout, you can utilize the Redis client to tailor these specific settings to your needs.


- JSON stored: { "REDIS_ENDPOINT": "127.0.0.1", "REDIS_PORT": "6379", "REDIS_PASSWORD": "redis-secret" }


- JSON stored: { "REDIS_ENDPOINT": "127.0.0.1", "REDIS_PORT": "6379", "REDIS_PASSWORD": "redis-secret" }

- redis_user.crt file stored in the "certs" directory of your Lambda function

- redis_user_private.key file stored in the "certs" directory of your Lambda function

- redis_ca.pem file stored in the "certs" directory of your Lambda function
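One way to turn the stored JSON and certificate files into connection settings is sketched below, assuming you have already fetched the secret's JSON string and that redis-py is your client. The returned keys (host, port, password, ssl, ssl_certfile, ssl_keyfile, ssl_ca_certs, socket_timeout) are redis-py connection arguments; the helper name, the 5 second timeout and the certs path relative to the working directory are assumptions.

```python
import json
import os
from typing import Any, Dict


def redis_client_kwargs(secret_string: str, certs_dir: str = "certs", socket_timeout: float = 5.0) -> Dict[str, Any]:
    """Build redis-py client keyword arguments from the stored JSON and bundled certificates."""
    secret = json.loads(secret_string)
    return {
        "host": secret["REDIS_ENDPOINT"],
        "port": int(secret["REDIS_PORT"]),  # stored as a string in the JSON
        "password": secret["REDIS_PASSWORD"],
        "ssl": True,
        "ssl_certfile": os.path.join(certs_dir, "redis_user.crt"),
        "ssl_keyfile": os.path.join(certs_dir, "redis_user_private.key"),
        "ssl_ca_certs": os.path.join(certs_dir, "redis_ca.pem"),
        "socket_timeout": socket_timeout,  # assumed custom timeout, in seconds
    }
```

You could then build the client with redis.Redis(**redis_client_kwargs(secret_string)) and hand it to RedisCachePersistenceLayer; check the API reference for the exact parameter that accepts a client.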

Advanced

Customizing the default behavior

The idempotent decorator can be further configured with IdempotencyConfig. These are the available options for further configuration:

| Parameter | Default | Description |
|---|---|---|
| event_key_jmespath | "" | JMESPath expression to extract the idempotency key from the event record using built-in functions |
| payload_validation_jmespath | "" | JMESPath expression to validate whether certain parameters have changed in the event while the event payload is otherwise the same |
| raise_on_no_idempotency_key | False | Raise exception if no idempotency key was found in the request |
| expires_after_seconds | 3600 | The number of seconds to wait before a record is expired |
| use_local_cache | False | Whether to locally cache idempotency results |
| local_cache_max_items | 256 | Max number of items to store in local cache |
| hash_function | md5 | Function to use for calculating hashes, as provided by hashlib in the standard library. |

Handling concurrent executions with the same payload

This utility will raise an IdempotencyAlreadyInProgressError exception if you receive multiple invocations with the same payload while the first invocation hasn't completed yet.

Info

If you receive IdempotencyAlreadyInProgressError, you can safely retry the operation.

This is a locking mechanism for correctness. Since we don't know the result from the first invocation yet, we can't safely allow another concurrent execution.
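Because the error only means another execution still holds the lock, a caller can back off and try again. The sketch below retries with exponential backoff; AlreadyInProgress is a hypothetical stand-in for IdempotencyAlreadyInProgressError, and the attempt count and delays are arbitrary defaults. The sleep parameter is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class AlreadyInProgress(Exception):
    """Hypothetical stand-in for IdempotencyAlreadyInProgressError."""


def call_with_retry(
    operation: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry while a concurrent execution holds the lock, doubling the delay each time."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return operation()
        except AlreadyInProgress:
            sleep(base_delay * (2 ** attempt))
    return operation()  # final attempt: let any error propagate to the caller
```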

Using in-memory cache

By default, in-memory local caching is disabled, since we don't know how much memory you consume per invocation compared to the maximum configured in your Lambda function.

Note: This in-memory cache is local to each Lambda execution environment

This means it will be most effective when your function's concurrency is low compared to the number of "retry" invocations with the same payload; otherwise, a retry may land in an execution environment whose cache is still empty.

You can enable in-memory caching with the use_local_cache parameter:


When enabled, the default is to cache a maximum of 256 records in each Lambda execution environment; you can change it with the local_cache_max_items parameter.
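A bounded local cache is usually a least-recently-used (LRU) map. The OrderedDict sketch below shows the eviction behaviour implied by local_cache_max_items; LRUCache is a hypothetical class, and whether Powertools evicts in exactly this order is an assumption. Like the real cache, a module-level instance would live only as long as its execution environment.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Bounded in-memory cache that evicts the least recently used entry."""

    def __init__(self, max_items: int = 256) -> None:
        self.max_items = max_items
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.max_items:
            self._data.popitem(last=False)  # drop the least recently used entry

    def __len__(self) -> int:
        return len(self._data)
```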

Expiring idempotency records

By default, we expire idempotency records after an hour (3600 seconds).

In most cases, it is not desirable to store the idempotency records forever. Rather, you want to guarantee that the same payload won't be executed within a period of time.

You can change this window with the expires_after_seconds parameter:


Setting expires_after_seconds to 300, for example, will mark any records older than 5 minutes as expired, and your function will be executed as normal if it is invoked with a matching payload.

Idempotency record expiration vs DynamoDB time-to-live (TTL)

DynamoDB TTL is a feature to remove items after a certain period of time; the deletion may occur within 48 hours of expiration.

We don't rely on DynamoDB or any persistence storage layer to determine whether a record is expired, to avoid inconsistent states while a deletion is still pending.

Instead, Idempotency records saved in the storage layer contain timestamps that can be verified upon retrieval and double checked within Idempotency feature.

Why?

A record might still be valid (COMPLETE) when we retrieve it, but in some rare cases it might expire a second later. A record could also be cached in memory. You might also want to have idempotent transactions that should expire in seconds.
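The check described above happens in code on every read, which is why a record that TTL hasn't deleted yet is still treated as expired. A minimal sketch, assuming expiry is stored as a Unix timestamp in seconds; the helper names are hypothetical.

```python
import time
from typing import Optional


def expiry_timestamp(expires_after_seconds: int = 3600, now: Optional[float] = None) -> int:
    """Unix timestamp (seconds) written to the record's expiry attribute."""
    now = time.time() if now is None else now
    return int(now) + expires_after_seconds


def is_expired(record_expiry: int, now: Optional[float] = None) -> bool:
    """Evaluated on every read, independently of when TTL physically deletes the item."""
    now = time.time() if now is None else now
    return int(now) > record_expiry
```

With expires_after_seconds=300, a record is ignored once more than 300 seconds have passed since it was written, regardless of when DynamoDB TTL removes it.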

Payload validation

Question: What if your function is invoked with the same payload except some outer parameters have changed?

Example: A payment transaction for a given productID was requested twice for the same customer, however the amount to be paid has changed in the second transaction.

By default, we will return the same result as before; however, in this instance that may be misleading, so we provide a fail-fast payload validation to address this edge case.

With payload_validation_jmespath, you can provide an additional JMESPath expression to specify which part of the event body should be validated against previous idempotent invocations.


In this example, the user_id and product_id keys are used as the payload to generate the idempotency key, as per event_key_jmespath parameter.

Note

If we try to send the same request but with a different amount, we will raise IdempotencyValidationError.

Without payload validation, we would have returned the same result as we did for the initial request. Since we're also returning an amount in the response, this could be quite confusing for the client.

By using payload_validation_jmespath="amount", we prevent this potentially confusing behavior and instead raise an Exception.
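The payment scenario can be sketched as follows: hash user_id and product_id for the key, store a second hash of amount with the record, and compare it on retries. PayloadValidationError stands in for IdempotencyValidationError, and idempotent_charge with its dict store is hypothetical, not the library's implementation.

```python
import hashlib
import json
from typing import Any, Dict


class PayloadValidationError(Exception):
    """Hypothetical stand-in for IdempotencyValidationError."""


def _hash(value: Any) -> str:
    return hashlib.md5(json.dumps(value, sort_keys=True).encode()).hexdigest()


def idempotent_charge(body: Dict[str, Any], store: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Key on user_id/product_id, but refuse a retry whose amount differs."""
    key = _hash([body["user_id"], body["product_id"]])  # plays the role of event_key_jmespath
    validation = _hash(body["amount"])  # plays the role of payload_validation_jmespath="amount"
    record = store.get(key)
    if record is not None:
        if record["validation"] != validation:
            raise PayloadValidationError("payload changed for an existing idempotency key")
        return record["response"]
    response = {"payment_id": f"pay-{len(store) + 1}", "amount": body["amount"]}
    store[key] = {"validation": validation, "response": response}
    return response
```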

Making idempotency key required¶

If you want to enforce that an idempotency key is required, you can set raise_on_no_idempotency_key to True.

This means that we will raise IdempotencyKeyError if the evaluation of event_key_jmespath is None.

Warning

To prevent errors, transactions will not be treated as idempotent if raise_on_no_idempotency_key is set to False and the evaluation of event_key_jmespath is None. Therefore, no data will be fetched, stored, or deleted in the idempotency storage layer.
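The two behaviours can be sketched as follows. This is a simplified model, not the utility's code: a single dictionary field (the hypothetical order_id) stands in for evaluating event_key_jmespath, a plain dict stands in for the storage layer, and `run`, `resolve_key` and `SketchKeyError` are hypothetical names.

```python
from typing import Any, Callable, Dict, Optional


class SketchKeyError(Exception):
    """Hypothetical stand-in for IdempotencyKeyError."""


def resolve_key(event: Dict[str, Any], field: str, raise_on_missing: bool) -> Optional[str]:
    value = event.get(field)  # stands in for evaluating event_key_jmespath
    if value is None:
        if raise_on_missing:
            raise SketchKeyError(f"no idempotency key found at {field!r}")
        return None  # caller skips idempotency entirely
    return str(value)


def run(event: Dict[str, Any], handler: Callable[[Dict[str, Any]], Any],
        store: Dict[str, Any], field: str = "order_id",
        raise_on_missing: bool = False) -> Any:
    key = resolve_key(event, field, raise_on_missing)
    if key is None:
        # No key: call the handler directly; nothing is read from or written to the store.
        return handler(event)
    if key in store:
        return store[key]
    result = handler(event)
    store[key] = result
    return result
```

With raise_on_missing=False, events without the key always reach the handler and leave the store untouched; with raise_on_missing=True, they are rejected before the handler runs.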


Customizing boto configuration¶

The boto_config and boto3_session parameters enable you to pass in a custom botocore config object or a custom boto3 session when constructing the persistence store.


Using a DynamoDB table with a composite primary key¶

When using a composite primary key table (hash+range key), use the sort_key_attr parameter when initializing your persistence layer.

With this setting, we save the idempotency key in the sort key instead of the partition key. By default, the partition key is then set to idempotency#{LAMBDA_FUNCTION_NAME}.

You can optionally set a static value for the partition key using the static_pk_value parameter.


A function configured with sort_key_attr="sort_key" would store data in DynamoDB like this:

| id | sort_key | expiration | status | data |
|---|---|---|---|---|
| idempotency#MyLambdaFunction | 1e956ef7da78d0cb890be999aecc0c9e | 1636549553 | COMPLETED | {"user_id": 12391, "message": "success"} |
| idempotency#MyLambdaFunction | 2b2cdb5f86361e97b4383087c1ffdf27 | 1636549571 | COMPLETED | {"user_id": 527212, "message": "success"} |
| idempotency#MyLambdaFunction | f091d2527ad1c78f05d54cc3f363be80 | 1636549585 | INPROGRESS | |
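The following sketch shows where each value lands in such a table. It is a hypothetical helper, not part of the utility, and its partition-key logic is an assumption based on the description above: the static value if one is given, otherwise idempotency# followed by the function name (read here from the standard AWS_LAMBDA_FUNCTION_NAME variable unless passed explicitly).

```python
import os
from typing import Any, Dict, Optional


def build_item(idempotency_key: str, expiration: int, status: str,
               data: Optional[str] = None,
               static_pk_value: Optional[str] = None,
               function_name: Optional[str] = None) -> Dict[str, Any]:
    """Hypothetical helper showing the item layout in a hash+range table."""
    fn = function_name or os.environ.get("AWS_LAMBDA_FUNCTION_NAME", "")
    # Partition key: static value if given, otherwise idempotency#{function name}.
    pk = static_pk_value if static_pk_value is not None else f"idempotency#{fn}"
    item: Dict[str, Any] = {
        "id": pk,                     # partition key attribute
        "sort_key": idempotency_key,  # sort key attribute (sort_key_attr="sort_key")
        "expiration": expiration,
        "status": status,
    }
    if data is not None:
        item["data"] = data  # only present once the execution has completed
    return item
```

Because every record for one function shares a partition key, the idempotency keys are spread across that partition's sort key range rather than across partitions.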

Bring your own persistent store¶

This utility provides an abstract base class (ABC), so that you can implement your choice of persistent storage layer.

You can create your own persistent store from scratch by inheriting the BasePersistenceLayer class and implementing _get_record(), _put_record(), _update_record() and _delete_record().

- _get_record(): Retrieves an item from the persistence store using an idempotency key and returns it as a DataRecord instance.
- _put_record(): Adds a DataRecord to the persistence store if it doesn't already exist with that key. Raises an ItemAlreadyExists exception if a non-expired entry already exists.
- _update_record(): Updates an item in the persistence store.
- _delete_record(): Removes an item from the persistence store.


Danger

Pay attention to the documentation for each method: you may need to perform additional checks inside these methods to ensure the idempotency guarantees remain intact.

For example, the _put_record method needs to raise an exception if a non-expired record already exists in the data store with a matching key.
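As an illustration of that contract, here is a standalone in-memory sketch. It deliberately does not subclass the real BasePersistenceLayer (so it runs without the library), and `DataRecordSketch`, `ItemAlreadyExists` and `InMemoryPersistenceLayer` are local stand-ins whose fields are trimmed down for the example. The key point is in _put_record: it refuses to overwrite a live record but lets an expired one be replaced.

```python
import time
from dataclasses import dataclass
from typing import Dict


class ItemAlreadyExists(Exception):
    """Local stand-in for the exception the contract above describes."""


@dataclass
class DataRecordSketch:
    # Trimmed-down local stand-in; the real DataRecord carries more fields.
    idempotency_key: str
    status: str
    expiry_timestamp: int
    response_data: str = ""

    def is_expired(self) -> bool:
        return time.time() > self.expiry_timestamp


class InMemoryPersistenceLayer:
    """Standalone sketch of the four-method contract (not a BasePersistenceLayer subclass)."""

    def __init__(self) -> None:
        self._items: Dict[str, DataRecordSketch] = {}

    def _get_record(self, idempotency_key: str) -> DataRecordSketch:
        record = self._items.get(idempotency_key)
        if record is None:
            # A real layer would raise the utility's own "item not found" error here.
            raise KeyError(idempotency_key)
        return record

    def _put_record(self, data_record: DataRecordSketch) -> None:
        existing = self._items.get(data_record.idempotency_key)
        # The idempotency guarantee lives here: never overwrite a live record.
        if existing is not None and not existing.is_expired():
            raise ItemAlreadyExists(data_record.idempotency_key)
        self._items[data_record.idempotency_key] = data_record

    def _update_record(self, data_record: DataRecordSketch) -> None:
        self._items[data_record.idempotency_key] = data_record

    def _delete_record(self, data_record: DataRecordSketch) -> None:
        self._items.pop(data_record.idempotency_key, None)
```

A dict-backed store is only safe within a single process; a shared store needs the existence check and write in _put_record to be a single atomic operation (for example, a conditional write).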

Compatibility with other utilities¶

Batch¶

See Batch integration above.

Validation utility¶

The idempotency utility can be used with the validator decorator. Ensure that idempotency is the innermost decorator.

Warning

If you use an envelope with the validator, the event received by the idempotency utility will be the unwrapped event - not the "raw" event Lambda was invoked with.

Make sure to account for this behavior if you set event_key_jmespath.
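The effect of decorator order can be shown with two toy decorators. Both are hypothetical stand-ins (neither is the real validator nor the real idempotent decorator): `unwrap_body` plays the role of a validator with a body envelope, and `record_event` records the event the idempotency decorator would hash. Because the outer decorator runs first, the innermost one only ever sees the unwrapped body, so a key expression must be written against that shape.

```python
import json
from functools import wraps
from typing import Any, Callable, Dict, List

seen_by_idempotency: List[Dict[str, Any]] = []


def unwrap_body(handler: Callable) -> Callable:
    """Hypothetical stand-in for a validator with an envelope: passes only the parsed body inward."""
    @wraps(handler)
    def wrapper(event: Dict[str, Any], context: Any = None) -> Any:
        return handler(json.loads(event["body"]), context)
    return wrapper


def record_event(handler: Callable) -> Callable:
    """Hypothetical stand-in for the idempotency decorator: records the event it would hash."""
    @wraps(handler)
    def wrapper(event: Dict[str, Any], context: Any = None) -> Any:
        seen_by_idempotency.append(event)
        return handler(event, context)
    return wrapper


@unwrap_body   # outer decorator: runs first
@record_event  # innermost: receives the already-unwrapped event
def lambda_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    return {"message": "success", "user_id": event["user_id"]}
```

Here the recorded event has no body or headers keys at all, which is why an expression such as body.user_id would find nothing after an envelope has been applied.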


Tip: JMESPath Powertools for AWS Lambda (Python) functions are also available

Built-in functions from the validation utility, such as powertools_json, powertools_base64 and powertools_base64_gzip, are also available in this utility.
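For intuition, the stdlib function below does by hand what an expression such as powertools_json(body).[user_id, product_id] is meant to do: decode the JSON string stored in body, then select fields from the result. The function name and field names are hypothetical and only illustrate the semantics; the utility evaluates the JMESPath expression itself.

```python
import json
from typing import Any, Dict, List


def key_material(event: Dict[str, Any]) -> List[Any]:
    """Illustrative stdlib equivalent of powertools_json(body).[user_id, product_id]."""
    body = json.loads(event["body"])  # roughly what powertools_json(body) does
    return [body["user_id"], body["product_id"]]
```

Without the decoding step, body is just a string, so a plain body.user_id expression would not find the field.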

Tracer¶

The idempotency utility can be used with the tracer decorator. Ensure that idempotency is the innermost decorator.

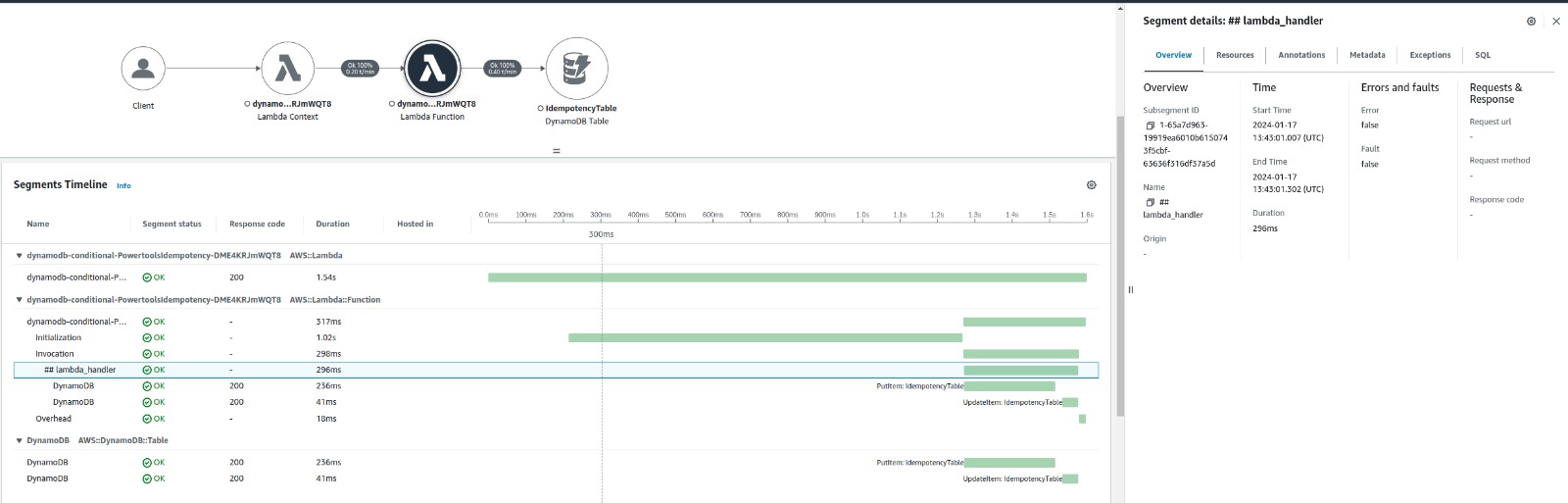

First execution¶

During the first execution with a payload, Lambda performs a PutItem followed by an UpdateItem operation to persist the record in DynamoDB.

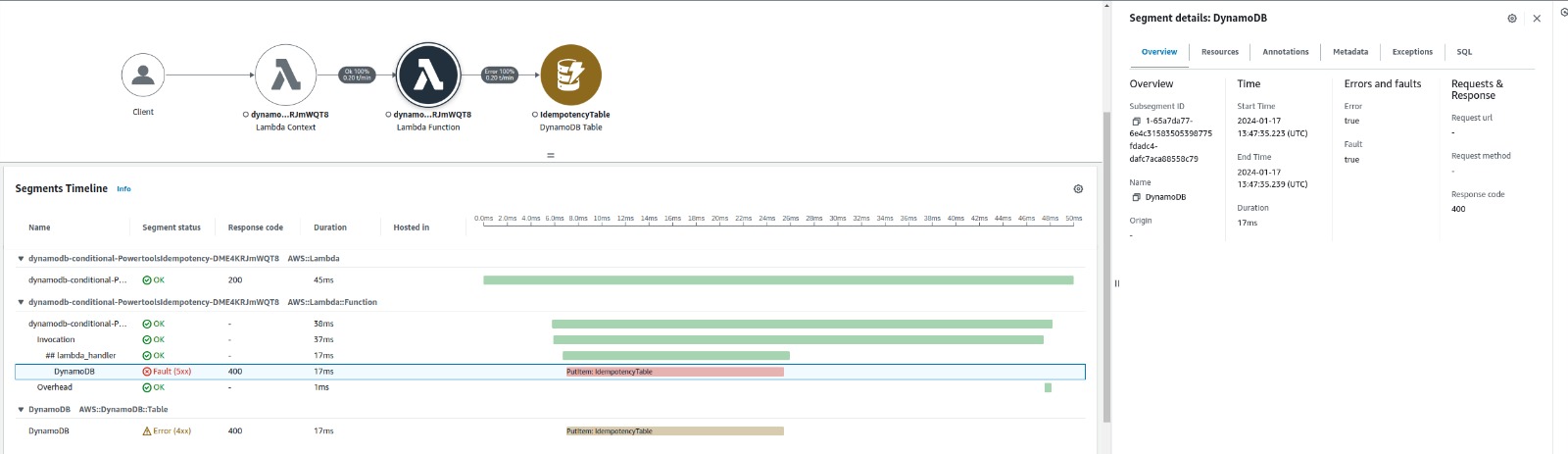

Subsequent executions¶

On subsequent executions with the same payload, Lambda optimistically tries to save the record in DynamoDB. If the record already exists, DynamoDB returns the item.

Explore how to handle conditional write errors in high-concurrency scenarios with DynamoDB in this blog post.
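The two paths can be simulated without AWS. In this sketch a dict plays the DynamoDB table, and `conditional_put`, `update_completed` and `invoke` are hypothetical names. It assumes, as described above, that a failed conditional write hands back the existing item (DynamoDB's PutItem can do this via ReturnValuesOnConditionCheckFailure). The first call logs PutItem then UpdateItem; a repeat call logs only the PutItem attempt and reuses the stored response.

```python
import time
from typing import Any, Callable, Dict, List, Optional

table: Dict[str, Dict[str, Any]] = {}  # simulated table keyed by idempotency key
operations: List[str] = []  # log of simulated API calls


def conditional_put(key: str, expiry: int) -> Optional[Dict[str, Any]]:
    """Simulated PutItem guarded by 'missing or expired'.
    Returns None on success, or the existing item when the condition fails."""
    operations.append("PutItem")
    existing = table.get(key)
    if existing is not None and existing["expiration"] > time.time():
        return existing
    table[key] = {"status": "INPROGRESS", "expiration": expiry}
    return None


def update_completed(key: str, response: Any) -> None:
    operations.append("UpdateItem")
    table[key].update(status="COMPLETED", data=response)


def invoke(key: str, handler: Callable[[], Any], ttl: int = 3600) -> Any:
    existing = conditional_put(key, int(time.time()) + ttl)
    if existing is None:
        # First execution: PutItem succeeded, run the handler, then UpdateItem.
        result = handler()
        update_completed(key, result)
        return result
    if existing["status"] == "COMPLETED":
        # Subsequent execution: reuse the stored response; the handler is not called.
        return existing["data"]
    raise RuntimeError("execution already in progress")  # stand-in for the in-progress error
```

Trying the write first (optimistically) means the common first-execution path costs no extra read, and a duplicate learns the stored state from the same failed write.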

Testing your code¶

The idempotency utility provides several routes to test your code.

Disabling the idempotency utility¶

When testing your code, you may wish to disable the idempotency logic altogether and focus on testing your business logic. To do this, set the environment variable POWERTOOLS_IDEMPOTENCY_DISABLED to a truthy value. If you prefer to set this only for specific tests and are using Pytest, you can use the monkeypatch fixture.


Testing with DynamoDB Local¶

To test with DynamoDB Local, you can replace the DynamoDB client used by the persistence layer with one you create inside your tests. This allows you to set the endpoint_url.


How do I mock all DynamoDB I/O operations¶

The idempotency utility lazily creates the dynamodb Table which it uses to access DynamoDB. This means it is possible to pass a mocked Table resource, or stub various methods.
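Lazy creation is what makes injection possible: if nothing talks to AWS until the first real call, a test can hand in a mock beforehand. The class below is a hypothetical illustration of that pattern (its attribute names are not the utility's); it uses unittest.mock.MagicMock as the table and only imports boto3 if no table was injected.

```python
from typing import Any, Optional
from unittest.mock import MagicMock


class LazyTableStore:
    """Hypothetical persistence class that creates its table handle on first use."""

    def __init__(self, table_name: str, table: Optional[Any] = None) -> None:
        self.table_name = table_name
        self._table = table  # may be injected, e.g. a MagicMock in tests

    @property
    def table(self) -> Any:
        if self._table is None:
            import boto3  # only imported when no table was injected
            self._table = boto3.resource("dynamodb").Table(self.table_name)
        return self._table

    def save(self, key: str, status: str) -> None:
        self.table.put_item(Item={"id": key, "status": status})


# Usage in a test: no AWS credentials or network access are needed.
fake_table = MagicMock()
store = LazyTableStore("idempotency-table", table=fake_table)
store.save("abc", "INPROGRESS")
```

Afterwards the mock records every call, so a test can assert exactly which DynamoDB operations were attempted and with what arguments.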


Testing with Redis¶

To test locally, you can either utilize fakeredis-py for a simulated Redis environment or refer to the MockRedis class used in our tests to mock Redis operations.
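If you prefer a hand-rolled double, the sketch below covers a tiny subset of redis-py's client interface (get, set with ex and nx, delete) backed by a dict. It is hypothetical and is not the MockRedis class mentioned above; which commands the Redis persistence layer actually issues is not shown here, so check that before relying on a double this small. fakeredis-py remains the more complete option.

```python
import time
from typing import Dict, Optional, Tuple, Union


class MiniMockRedis:
    """Hypothetical dict-backed stand-in for a tiny subset of redis-py's client API."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[bytes, Optional[float]]] = {}

    def _alive(self, name: str) -> bool:
        entry = self._data.get(name)
        if entry is None:
            return False
        _, expires_at = entry
        if expires_at is not None and time.time() >= expires_at:
            del self._data[name]  # lazily evict expired keys
            return False
        return True

    def get(self, name: str) -> Optional[bytes]:
        return self._data[name][0] if self._alive(name) else None

    def set(self, name: str, value: Union[str, bytes], ex: Optional[int] = None,
            nx: bool = False) -> Optional[bool]:
        if nx and self._alive(name):
            return None  # redis-py returns None when NX prevents the write
        raw = value.encode() if isinstance(value, str) else value
        expires_at = time.time() + ex if ex is not None else None
        self._data[name] = (raw, expires_at)
        return True

    def delete(self, *names: str) -> int:
        removed = 0
        for name in names:
            if self._alive(name):
                del self._data[name]
                removed += 1
        return removed
```

Values are stored as bytes because redis-py returns bytes by default (unless the client is created with decode_responses=True).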


If you want to set up a real Redis client for integration testing, you can reference the code provided below.


- Use this script to set up a temporary Redis Docker container and remove it automatically upon completion.

Extra resources¶

If you're interested in a deep dive on how Amazon uses idempotency when building our APIs, check out this article.