Upgrade guide

End of support v1

On March 31st, 2023, Powertools for AWS Lambda (Python) v1 reached end of support and no longer receives updates or releases. If you are still using v1, we strongly recommend that you read this upgrade guide and update to the latest version.

Given our commitment to all of our customers using Powertools for AWS Lambda (Python), we will keep the v1 releases on PyPI and the 1.x documentation available to prevent any disruption.

Migrate to v2 from v1

We've made minimal breaking changes to make your transition to v2 as smooth as possible.

Quick summary

| Area | Change | Code change required | IAM Permissions change required |
|---|---|---|---|
| Batch | Removed legacy SQS batch processor in favour of BatchProcessor. | Yes | - |
| Environment variables | Removed legacy POWERTOOLS_EVENT_HANDLER_DEBUG in favour of POWERTOOLS_DEV. | - | - |
| Event Handler | Updated headers response format due to multi-value headers and cookie support. | Tests only | - |
| Event Source Data Classes | Replaced DynamoDBStreamEvent AttributeValue with native Python types. | Yes | - |
| Feature Flags / Parameters | Updated AppConfig API calls due to GetConfiguration API deprecation. | - | Yes |
| Idempotency | Updated partition key to include fully qualified function/method names. | - | - |

First Steps

All dependencies are optional now. Tracer, Validation, and Parser now require additional dependencies.

Before you start, we suggest making a copy of your current working project or creating a new branch with git.

- Upgrade Python to at least v3.8

- Ensure you have the latest version via Lambda Layer or PyPI.

- Review the following sections to confirm whether they affect your code

Legacy SQS Batch Processor

We removed the deprecated PartialSQSProcessor class and sqs_batch_processor decorator.

You can migrate to BatchProcessor with the following changes:

- If you use the sqs_batch_processor decorator, change to the batch_processor decorator

- If you use PartialSQSProcessor, change to BatchProcessor

- Enable ReportBatchItemFailures in your Lambda Event Source

- Change your Lambda Handler to return the new response format
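The new response format in the last step is the partial batch response that Lambda expects once ReportBatchItemFailures is enabled. The sketch below hand-rolls that shape using only the standard library, to show what a migrated handler ends up returning. It is not how BatchProcessor is implemented, and record_handler and sample_event are hypothetical names made up for illustration.

```python
import json
from typing import Any, Dict, List


def record_handler(record: Dict[str, Any]) -> None:
    """Hypothetical per-record business logic; raises to signal a failure."""
    payload = json.loads(record["body"])
    if payload.get("fail"):
        raise ValueError(f"cannot process message {record['messageId']}")


def lambda_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, List[Dict[str, str]]]:
    """Process every SQS record and report only the ones that failed."""
    failures = []
    for record in event.get("Records", []):
        try:
            record_handler(record)
        except Exception:
            # Only the messages listed here are made visible again for retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


# Made-up SQS event with one good and one failing message.
sample_event = {
    "Records": [
        {"messageId": "msg-1", "body": json.dumps({"order": 1})},
        {"messageId": "msg-2", "body": json.dumps({"order": 2, "fail": True})},
    ]
}
response = lambda_handler(sample_event)
print(response)
```

With Powertools you would not build this dictionary by hand: the processor collects the failures, and the handler returns the processor's response instead of returning nothing.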


Event Handler headers response format

No code changes required

This only applies if you're using APIGatewayRestResolver and asserting custom header values in your tests.

Previously, custom headers were available under the headers key in the Event Handler response.


In V2, we add all headers under the multiValueHeaders key. This enables seamless support for multi-value headers and cookies in fine-grained responses.
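To make the difference concrete for test code, here is a minimal sketch of the two response shapes as plain dictionaries. The status code, body, and header values are illustrative. The sketch assumes each value under multiValueHeaders is a list of strings, as the API Gateway multi-value format expects, and header_values is a hypothetical test helper rather than part of Powertools.

```python
from typing import Any, Dict, List


def header_values(response: Dict[str, Any], name: str) -> List[str]:
    """Hypothetical test helper: read a header from either response shape."""
    multi = response.get("multiValueHeaders") or {}
    if name in multi:
        return list(multi[name])
    single = response.get("headers") or {}
    return [single[name]] if name in single else []


# Illustrative V1-style response: one string per header under "headers".
v1_response = {
    "statusCode": 200,
    "headers": {"Content-Type": "application/json"},
    "body": "{}",
}

# Illustrative V2-style response: a list of values under "multiValueHeaders".
v2_response = {
    "statusCode": 200,
    "multiValueHeaders": {"Content-Type": ["application/json"]},
    "body": "{}",
}

v1_content_type = header_values(v1_response, "Content-Type")
v2_content_type = header_values(v2_response, "Content-Type")
print(v1_content_type, v2_content_type)
```

A test that previously asserted on response["headers"]["Content-Type"] would now assert on response["multiValueHeaders"]["Content-Type"] and compare against a list.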


DynamoDBStreamEvent in Event Source Data Classes

This also applies if you're using DynamoDB BatchProcessor.

You will now receive native Python types when accessing DynamoDB records via the keys, new_image, and old_image attributes in DynamoDBStreamEvent.

Previously, you'd receive an AttributeValue instance and had to deserialize each item yourself, either to the type you wanted for convenience or, via the get_value method, to the type DynamoDB stored.

With this change, you can access data deserialized as stored in DynamoDB, and no longer need to recursively deserialize nested objects (Maps) if you had them.

Note

For a lossless conversion of the DynamoDB Number type, we follow the AWS Python SDK (boto3) approach and convert to Decimal.
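As a rough illustration of what native Python types means here, the sketch below converts a DynamoDB-typed image into plain Python values, with Number mapped to Decimal. It is a hand-rolled, standard-library approximation written for this guide, not the deserializer Powertools or boto3 ships. It skips binary types, and the sample new_image is made up.

```python
from decimal import Decimal
from typing import Any, Dict


def deserialize(attr: Dict[str, Any]) -> Any:
    """Convert one DynamoDB-typed attribute into a native Python value."""
    # Each attribute is a single-entry dict such as {"S": "text"}.
    (type_tag, value), = attr.items()
    if type_tag == "S":
        return value
    if type_tag == "N":
        return Decimal(value)  # lossless, unlike float
    if type_tag == "BOOL":
        return value
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [deserialize(item) for item in value]
    if type_tag == "M":
        # Nested maps are handled recursively.
        return {key: deserialize(item) for key, item in value.items()}
    if type_tag == "SS":
        return set(value)
    if type_tag == "NS":
        return {Decimal(item) for item in value}
    raise TypeError(f"unsupported DynamoDB type: {type_tag}")


# Made-up stream image in DynamoDB's typed JSON form.
new_image = {
    "Id": {"N": "101"},
    "Message": {"S": "New item!"},
    "Tags": {"L": [{"S": "a"}, {"S": "b"}]},
    "Meta": {"M": {"Price": {"N": "9.99"}, "Active": {"BOOL": True}}},
}
item = {key: deserialize(value) for key, value in new_image.items()}
print(item)
```

In V2 this conversion has already happened by the time you read keys, new_image, or old_image, so code that called get_value or walked nested Maps by hand can be removed.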


Feature Flags and AppConfig Parameter utility

No code changes required

We replaced the GetConfiguration API (now deprecated) with GetLatestConfiguration and StartConfigurationSession.

As such, you must update your IAM Role permissions to allow the following IAM actions:

- appconfig:GetLatestConfiguration

- appconfig:StartConfigurationSession
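One way to express these two actions is as an IAM policy statement attached to your function's role. The sketch below builds such a document as a Python dictionary and prints it as JSON. The "*" resource is a placeholder assumption, and you would likely want to narrow it to the ARNs of your own AppConfig application, environment, and configuration profile.

```python
import json

# Placeholder scope: replace "*" with your own AppConfig resource ARNs.
appconfig_statement = {
    "Effect": "Allow",
    "Action": [
        "appconfig:GetLatestConfiguration",
        "appconfig:StartConfigurationSession",
    ],
    "Resource": "*",
}

policy_document = {
    "Version": "2012-10-17",
    "Statement": [appconfig_statement],
}

policy_json = json.dumps(policy_document, indent=2)
print(policy_json)
```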

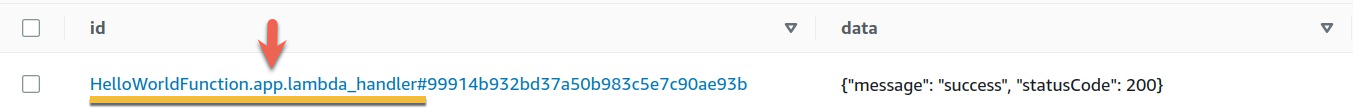

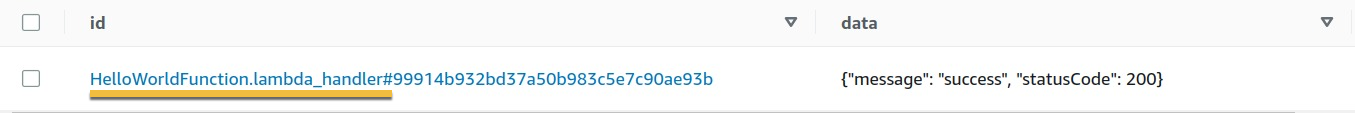

Idempotency partition key format

No code changes required

We changed the DynamoDB partition key format to include fully qualified function/method names. This means that idempotency records written by v1 that have not yet expired will not match the new key format and will be ignored.

Previously, we used the function/method name to generate the partition key value.

e.g.

HelloWorldFunction.lambda_handler#99914b932bd37a50b983c5e7c90ae93b

In V2, we now distinguish between distinct classes or modules that may have the same function/method name.

For example, an ABC or Protocol class may have multiple implementations of a process_payment method, each of which may produce different results.

e.g.

HelloWorldFunction.app.lambda_handler#99914b932bd37a50b983c5e7c90ae93b
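The sketch below illustrates why the qualified name matters. Two hypothetical classes implement process_payment: a key built from the bare method name collides, while a key that includes the module and qualified name does not. The key builders and the MD5-of-JSON hashing are assumptions made for illustration and may differ from what Powertools does internally.

```python
import hashlib
import json
from typing import Any, Callable


def payload_hash(payload: Any) -> str:
    """Assumed hashing scheme for illustration: MD5 of the JSON payload."""
    return hashlib.md5(json.dumps(payload, sort_keys=True).encode()).hexdigest()


def v1_style_key(function_name: str, fn: Callable, payload: Any) -> str:
    """Key built from the bare function/method name only."""
    return f"{function_name}.{fn.__name__}#{payload_hash(payload)}"


def v2_style_key(function_name: str, fn: Callable, payload: Any) -> str:
    """Key built from the module plus the qualified function/method name."""
    return f"{function_name}.{fn.__module__}.{fn.__qualname__}#{payload_hash(payload)}"


class CardGateway:
    """Hypothetical implementation #1."""

    def process_payment(self, payment: dict) -> str:
        return "charged card"


class WalletGateway:
    """Hypothetical implementation #2 with the same method name."""

    def process_payment(self, payment: dict) -> str:
        return "debited wallet"


payment = {"order_id": 1}
card, wallet = CardGateway.process_payment, WalletGateway.process_payment

v1_keys = {v1_style_key("HelloWorldFunction", fn, payment) for fn in (card, wallet)}
v2_keys = {v2_style_key("HelloWorldFunction", fn, payment) for fn in (card, wallet)}
print(len(v1_keys), len(v2_keys))
```

With the bare name, both implementations would share one idempotency record for the same payload; with the qualified name, each gets its own.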