Metrics

Metrics creates custom metrics asynchronously by logging metrics to standard output following Amazon CloudWatch Embedded Metric Format (EMF).

These metrics can be visualized through the Amazon CloudWatch Console.

Key features

- Aggregate up to 100 metrics using a single CloudWatch EMF object (large JSON blob)

- Validate against common metric definition mistakes (metric unit, values, max dimensions, max metrics, etc.)

- Metrics are created asynchronously by the CloudWatch service; no custom stacks needed

- Context manager to create a one-off metric with a different dimension

Terminologies

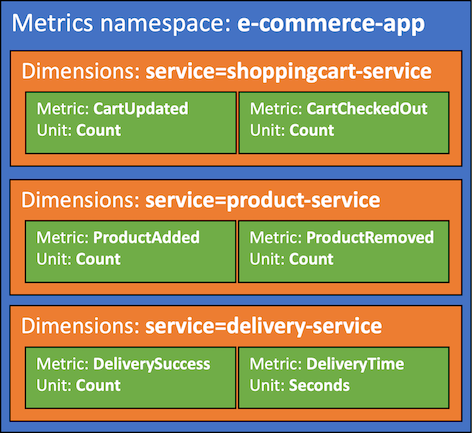

If you're new to Amazon CloudWatch, there are two terms you must be aware of before using this utility:

- Namespace. It's the highest level container that will group multiple metrics from multiple services for a given application, for example ServerlessEcommerce.

- Dimensions. Metrics metadata in key-value format. They help you slice and dice metrics visualization, for example ColdStart metric by Payment service.
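To make both terms concrete, here is a minimal, standard-library-only sketch of the kind of EMF document this utility writes to standard output, using the "ColdStart by payment service" example. The _aws, Timestamp, CloudWatchMetrics, Namespace, Dimensions and Metrics keys follow the public EMF specification; the build_emf_blob helper and its arguments are hypothetical and not part of Powertools.

```python
import json
import time


def build_emf_blob(namespace, dimensions, metrics):
    """Build a single EMF document (hypothetical helper, not the Powertools API).

    namespace:  top-level container, e.g. "ServerlessEcommerce"
    dimensions: dimension name -> value, e.g. {"service": "payment"}
    metrics:    metric name -> (unit, value), e.g. {"ColdStart": ("Count", 1)}
    """
    blob = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    # One dimension set listing every dimension name
                    "Dimensions": [list(dimensions)],
                    # Metric definitions carry name and unit only
                    "Metrics": [{"Name": name, "Unit": unit} for name, (unit, _) in metrics.items()],
                }
            ],
        }
    }
    # Dimension values and metric values live at the top level of the document
    blob.update(dimensions)
    blob.update({name: value for name, (_, value) in metrics.items()})
    return blob


# ColdStart metric, sliced by the "payment" service, inside the ServerlessEcommerce namespace
blob = build_emf_blob("ServerlessEcommerce", {"service": "payment"}, {"ColdStart": ("Count", 1)})
print(json.dumps(blob))
```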

Getting started

Tip

All examples shared in this documentation are available within the project repository.

Metrics has two global settings that apply to all metrics it emits:

| Setting | Description | Environment variable | Constructor parameter |
|---|---|---|---|
| Metric namespace | Logical container where all metrics will be placed, e.g. ServerlessAirline | POWERTOOLS_METRICS_NAMESPACE | namespace |
| Service | Optionally, sets service metric dimension across all metrics, e.g. payment | POWERTOOLS_SERVICE_NAME | service |

Tip

Use your application or main service as the metric namespace to easily group all metrics.

AWS Serverless Application Model (SAM) example

Note

For brevity, all code snippets on this page rely on the environment variables above being set.

This lets us instantiate metrics = Metrics() instead of metrics = Metrics(service="booking", namespace="ServerlessAirline"), etc.
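One way to picture how the two settings are resolved is the sketch below. It assumes an explicit constructor argument takes precedence over the matching environment variable; resolve_setting is a hypothetical helper, not a Powertools function.

```python
import os


def resolve_setting(explicit, env_var):
    """Hypothetical resolver: an explicit argument wins, otherwise read the environment variable."""
    return explicit if explicit is not None else os.getenv(env_var)


# Values normally provided by your deployment configuration, e.g. the SAM template
os.environ["POWERTOOLS_METRICS_NAMESPACE"] = "ServerlessAirline"
os.environ["POWERTOOLS_SERVICE_NAME"] = "booking"

namespace = resolve_setting(None, "POWERTOOLS_METRICS_NAMESPACE")  # falls back to the env var
service = resolve_setting("payment", "POWERTOOLS_SERVICE_NAME")    # explicit argument wins
print(namespace, service)  # ServerlessAirline payment
```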

Creating metrics

You can create metrics using the add_metric method, and dimensions for all your aggregate metrics using the add_dimension method.

Tip

You can initialize Metrics in any other module too. It'll keep track of your aggregate metrics in memory to optimize costs (one blob instead of multiple).
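The tip above relies on metric state being shared across instances. A minimal sketch of that idea, assuming a hypothetical SharedMetrics class whose buckets are class attributes, so an instance created in any module writes to the same store and everything is serialized as one blob; the real Metrics class is more involved.

```python
import json


class SharedMetrics:
    """Hypothetical stand-in for Metrics: buckets are class attributes,
    so every instance, created in any module, writes to the same store."""

    _metrics = {}     # metric name -> {"Unit": ..., "Value": ...}
    _dimensions = {}  # dimension name -> value

    def __init__(self, namespace):
        self.namespace = namespace

    def add_metric(self, name, unit, value):
        # Item assignment mutates the class-level dict, not a per-instance copy
        self._metrics[name] = {"Unit": unit, "Value": value}

    def add_dimension(self, name, value):
        self._dimensions[name] = value

    def serialize(self):
        """Build ONE document with everything added so far (Timestamp omitted for brevity)."""
        doc = {
            "_aws": {
                "CloudWatchMetrics": [{
                    "Namespace": self.namespace,
                    "Dimensions": [list(self._dimensions)],
                    "Metrics": [{"Name": n, "Unit": m["Unit"]} for n, m in self._metrics.items()],
                }]
            }
        }
        doc.update(self._dimensions)
        doc.update({n: m["Value"] for n, m in self._metrics.items()})
        return doc


# e.g. one instance in the handler module and another in a helper module
handler_metrics = SharedMetrics("ServerlessAirline")
helper_metrics = SharedMetrics("ServerlessAirline")

handler_metrics.add_dimension("environment", "prod")
handler_metrics.add_metric("SuccessfulBooking", "Count", 1)
helper_metrics.add_metric("BookingLatency", "Milliseconds", 42)

blob = handler_metrics.serialize()
print(json.dumps(blob))
```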


Tip: Autocomplete Metric Units

The MetricUnit enum makes it easier to find a metric unit supported by CloudWatch. Alternatively, you can pass the unit as a string if you already know it, e.g. unit="Count".
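Accepting either form can be sketched with a str-based enum: each member compares equal to its string value, so MetricUnit.Count and "Count" normalize to the same thing. The members below are a hypothetical subset chosen for illustration, and normalize_unit is not a Powertools function.

```python
from enum import Enum


class MetricUnit(str, Enum):
    """Hypothetical subset of CloudWatch units; the real enum has many more members."""

    Count = "Count"
    Seconds = "Seconds"
    Milliseconds = "Milliseconds"
    Bytes = "Bytes"


def normalize_unit(unit):
    """Accept either a MetricUnit member or its string value; reject anything else."""
    try:
        return MetricUnit(unit).value
    except ValueError:
        valid = [u.value for u in MetricUnit]
        raise ValueError(f"Invalid metric unit {unit!r}; expected one of {valid}") from None


print(normalize_unit(MetricUnit.Count))  # Count
print(normalize_unit("Milliseconds"))    # Milliseconds
```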

Note: Metrics overflow

CloudWatch EMF supports a max of 100 metrics per batch. The Metrics utility will flush all metrics when adding the 100th metric. Subsequent metrics (the 101st onward) will be aggregated into a new EMF object, for your convenience.
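A minimal sketch of that overflow rule, assuming a hypothetical OverflowingStore that keeps flushed documents in a list instead of printing them: adding 150 distinct metrics produces one full document of 100 metrics and a second one holding the remaining 50.

```python
MAX_METRICS = 100  # CloudWatch EMF limit on metrics per document


class OverflowingStore:
    """Hypothetical store that flushes automatically when the 100th metric is added."""

    def __init__(self):
        self.metrics = {}
        self.flushed = []  # stands in for documents written to standard output

    def add_metric(self, name, value):
        self.metrics[name] = value
        if len(self.metrics) == MAX_METRICS:
            self.flush()  # the 100th metric triggers a flush

    def flush(self):
        if self.metrics:
            self.flushed.append(dict(self.metrics))
            self.metrics.clear()


store = OverflowingStore()
for i in range(150):
    store.add_metric(f"Metric{i}", 1)
store.flush()  # final flush at the end of the invocation

print([len(doc) for doc in store.flushed])  # [100, 50]
```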

Warning: Do not create metrics or dimensions outside the handler

Metrics or dimensions added in the global scope will only be added during cold start. Disregard this if that's the intended behavior.

Adding multi-value metrics

You can call add_metric() with the same metric name multiple times. The values will be grouped together in a list.
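The grouping can be sketched with a dictionary of lists: repeated names append to the same list, and the list is written as the metric's value, since EMF accepts an array of values for one metric name. The module-level buckets and the add_metric and serialize functions here are hypothetical stand-ins, not the utility's internals.

```python
import json
from collections import defaultdict

metric_values = defaultdict(list)  # metric name -> every value recorded so far
metric_units = {}                  # metric name -> unit


def add_metric(name, unit, value):
    """Repeated names append to the same list instead of overwriting the previous value."""
    metric_units[name] = unit
    metric_values[name].append(float(value))


def serialize(namespace, service):
    doc = {
        "_aws": {
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["service"]],
                "Metrics": [{"Name": n, "Unit": u} for n, u in metric_units.items()],
            }]
        },
        "service": service,
    }
    # EMF accepts an array of values for a single metric name
    doc.update(metric_values)
    return doc


add_metric("TurbineReads", "Count", 1)
add_metric("TurbineReads", "Count", 8)
print(json.dumps(serialize("ServerlessAirline", "booking")["TurbineReads"]))  # [1.0, 8.0]
```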


Adding default dimensions

You can use the set_default_dimensions method, or the default_dimensions parameter of the log_metrics decorator, to persist dimensions across Lambda invocations.

If you'd like to remove them at some point, you can use the clear_default_dimensions method.
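A minimal sketch of how default dimensions differ from those added per invocation, assuming a hypothetical DimensionState class whose flush method stands in for the end of an invocation. It mirrors the behavior described above, but it is not the Powertools implementation.

```python
class DimensionState:
    """Hypothetical sketch: default dimensions outlive a flush, ad hoc ones do not."""

    def __init__(self):
        self.default_dimensions = {}
        self.dimensions = {}

    def set_default_dimensions(self, **dimensions):
        self.default_dimensions.update(dimensions)

    def clear_default_dimensions(self):
        self.default_dimensions.clear()

    def add_dimension(self, name, value):
        self.dimensions[name] = value

    def flush(self):
        """Return the dimensions for this invocation, then drop the ad hoc ones."""
        emitted = {**self.default_dimensions, **self.dimensions}
        self.dimensions.clear()
        return emitted


state = DimensionState()
state.set_default_dimensions(environment="prod")

state.add_dimension("booking_type", "flight")
first = state.flush()   # invocation 1: default plus ad hoc dimension
second = state.flush()  # invocation 2: only the default survives

state.clear_default_dimensions()
third = state.flush()   # after clearing: nothing left

print(first, second, third)
```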


Flushing metrics

Once you finish adding all your metrics, you need to serialize and flush them to standard output. You can do that automatically with the log_metrics decorator.

This decorator validates, serializes, and flushes all your metrics. During metrics validation, if no metrics are provided, a warning will be logged, but no exception will be raised.
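A rough sketch of what a flush-on-exit decorator does, assuming a hypothetical log_metrics_sketch decorator and a module-level pending_metrics dict. It follows the behavior described above (serialize and print after the handler returns, warn rather than raise on empty metrics), but it is not the Powertools implementation, and the warning text is made up.

```python
import functools
import json
import warnings

pending_metrics = {}  # hypothetical in-memory store filled by the handler


def log_metrics_sketch(handler):
    """Run the handler, then serialize and flush whatever metrics it recorded."""

    @functools.wraps(handler)
    def wrapper(event, context):
        try:
            return handler(event, context)
        finally:
            if not pending_metrics:
                # Empty metrics: warn, but do not raise
                warnings.warn("No metrics to publish, skipping", stacklevel=2)
            else:
                print(json.dumps(pending_metrics))  # one JSON line on standard output
                pending_metrics.clear()             # start the next invocation empty

    return wrapper


@log_metrics_sketch
def lambda_handler(event, context):
    pending_metrics["SuccessfulBooking"] = [1.0]
    return {"statusCode": 200}


lambda_handler({}, None)  # prints {"SuccessfulBooking": [1.0]}
```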


Tip: Metric validation

If metrics are provided and any of the following criteria are not met, a SchemaValidationError exception will be raised:

- Maximum of 29 user-defined dimensions

- Namespace is set, and no more than one

- Metric units must be supported by CloudWatch
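The validation rules listed above can be sketched as one function. SchemaValidationError here is a locally defined stand-in for the utility's exception, and VALID_UNITS is a deliberately small subset of the units CloudWatch accepts; both are assumptions for illustration.

```python
class SchemaValidationError(Exception):
    """Local stand-in for the utility's exception."""


MAX_DIMENSIONS = 29
VALID_UNITS = {"Seconds", "Milliseconds", "Bytes", "Percent", "Count", "None"}  # subset only


def validate(namespace, dimensions, metrics):
    """Apply the documented checks; metrics maps metric name -> unit."""
    if not metrics:
        return  # empty metrics are handled separately (a warning by default)
    if len(dimensions) > MAX_DIMENSIONS:
        raise SchemaValidationError(f"Maximum of {MAX_DIMENSIONS} dimensions, got {len(dimensions)}")
    # Exactly one namespace: a single non-empty string (a list of several is rejected)
    if not isinstance(namespace, str) or not namespace:
        raise SchemaValidationError("A single, non-empty namespace must be set")
    for name, unit in metrics.items():
        if unit not in VALID_UNITS:
            raise SchemaValidationError(f"Metric {name!r} has unsupported unit {unit!r}")


validate("ServerlessAirline", {"service": "booking"}, {"SuccessfulBooking": "Count"})  # passes
```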

Raising SchemaValidationError on empty metrics

If you want to ensure at least one metric is always emitted, you can pass raise_on_empty_metrics to the log_metrics decorator:

Raising SchemaValidationError exception if no metrics are added

Suppressing warning messages on empty metrics

If you expect your function to execute without publishing metrics every time, you can suppress the warning with warnings.filterwarnings("ignore", "No metrics to publish*").
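The suppression relies on Python's standard warnings filters: the message argument is a regular expression matched against the start of the warning text. A small check, using a hypothetical emit_empty_warning function that issues a UserWarning whose text starts with "No metrics to publish":

```python
import warnings


def emit_empty_warning():
    """Hypothetical stand-in for the warning issued on empty metrics."""
    warnings.warn("No metrics to publish, skipping", UserWarning)


def count_warnings(suppress):
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # record every warning, no deduplication
        if suppress:
            # Same call as in the tip; filterwarnings inserts it ahead of "always"
            warnings.filterwarnings("ignore", "No metrics to publish*")
        emit_empty_warning()
    return len(caught)


print(count_warnings(suppress=False))  # 1
print(count_warnings(suppress=True))   # 0
```

Because catch_warnings restores the previous filters on exit, the "ignore" rule above does not leak outside the block; in your own code you would call warnings.filterwarnings once, at module level.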

Capturing cold start metric

You can optionally capture cold start metrics with the log_metrics decorator via the capture_cold_start_metric parameter.


If it's a cold start invocation, this feature will:

- Create a separate EMF blob solely containing a metric named ColdStart

- Add function_name and service dimensions

This has the advantage of keeping the cold start metric separate from your application metrics, where you might have unrelated dimensions.
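A minimal sketch of that behavior, assuming a hypothetical cold_start_blob function and a module-level flag that is True only for the first invocation in an execution environment. Timestamp is omitted for brevity, and no value is returned on warm invocations, matching the note below that 0 is not emitted.

```python
import json

_is_cold_start = True  # module scope: True only for the first invocation in this environment


def cold_start_blob(namespace, function_name, service):
    """Return a separate EMF-style document holding only ColdStart, or None when warm."""
    global _is_cold_start
    if not _is_cold_start:
        return None  # warm invocation: nothing is emitted, not even a 0
    _is_cold_start = False
    return {
        "_aws": {
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["function_name", "service"]],
                "Metrics": [{"Name": "ColdStart", "Unit": "Count"}],
            }]
        },
        "function_name": function_name,
        "service": service,
        "ColdStart": [1.0],
    }


first = cold_start_blob("ServerlessAirline", "booking-fn", "booking")
second = cold_start_blob("ServerlessAirline", "booking-fn", "booking")
print(json.dumps(first["_aws"]["CloudWatchMetrics"][0]["Dimensions"]))  # [["function_name", "service"]]
print(second)  # None
```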

Info

We do not emit 0 as a value for the ColdStart metric for cost reasons. Let us know if you'd prefer a flag to override it.

Advanced

Adding metadata

You can add high-cardinality data as part of your Metrics log with the add_metadata method. This is useful when you want to search highly contextual information along with your metrics in your logs.

Info

This data will not be available in metrics visualization; use dimensions for that purpose.
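A minimal sketch of why metadata is searchable but not visualized, assuming a hypothetical serialize_with_metadata helper. Metadata keys are written at the top level of the log event, but they are not listed under Dimensions or Metrics, so CloudWatch does not extract them as metrics.

```python
import json


def serialize_with_metadata(namespace, dimensions, metrics, metadata):
    """Hypothetical serializer; metrics maps name -> (unit, value)."""
    doc = {
        "_aws": {
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(dimensions)],
                "Metrics": [{"Name": n, "Unit": u} for n, (u, _) in metrics.items()],
            }]
        }
    }
    doc.update(dimensions)
    doc.update({n: v for n, (_, v) in metrics.items()})
    # Metadata sits at the top level too, but nothing under _aws references it,
    # so it stays in the log event only, where you can search for it.
    doc.update(metadata)
    return doc


doc = serialize_with_metadata(
    "ServerlessAirline",
    {"service": "booking"},
    {"SuccessfulBooking": ("Count", [1.0])},
    {"booking_id": "booking-1234"},  # high-cardinality value: fine as metadata, costly as a dimension
)
print(json.dumps(doc))
```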


Single metric with a different dimension

CloudWatch EMF uses the same dimensions across all your metrics. Use single_metric if you have a metric that should have different dimensions.
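The idea behind single_metric can be sketched as a context manager that collects dimensions for one metric and, on normal exit, prints that metric in its own document. single_metric_sketch and _IsolatedMetric are hypothetical names; the real context manager's signature may differ.

```python
import json
from contextlib import contextmanager


class _IsolatedMetric:
    """Holds the dimensions that apply to this one metric only."""

    def __init__(self):
        self.dimensions = {}

    def add_dimension(self, name, value):
        self.dimensions[name] = value


@contextmanager
def single_metric_sketch(name, unit, value, namespace):
    """Hypothetical sketch: emit one metric in its own document, with its own dimensions."""
    metric = _IsolatedMetric()
    yield metric  # the caller adds dimensions inside the with block
    # On normal exit, flush a separate document, independent of other metrics
    doc = {
        "_aws": {
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(metric.dimensions)],
                "Metrics": [{"Name": name, "Unit": unit}],
            }]
        },
        **metric.dimensions,
        name: [float(value)],
    }
    print(json.dumps(doc))


with single_metric_sketch("MySingleMetric", "Count", 1, "ServerlessAirline") as metric:
    metric.add_dimension("environment", "staging")
```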

Info

Generally, this would be an edge case since you pay for each unique metric. Keep the following formula in mind:

unique metric = (metric_name + dimension_name + dimension_value)
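One way to apply the formula is to count distinct combinations. A minimal sketch, where unique_metrics is a hypothetical helper and the namespace is left out because all records share one:

```python
def unique_metrics(records):
    """records: iterable of (metric_name, {dimension_name: dimension_value}).

    Each distinct (metric name, dimension names, dimension values) combination
    counts as a separate custom metric.
    """
    return {(name, tuple(sorted(dims.items()))) for name, dims in records}


records = [
    ("SuccessfulBooking", {"service": "booking"}),
    ("SuccessfulBooking", {"service": "booking"}),                            # same combination
    ("SuccessfulBooking", {"service": "booking", "environment": "prod"}),     # extra dimension
    ("SuccessfulBooking", {"service": "booking", "environment": "staging"}),  # new value
]
print(len(unique_metrics(records)))  # 3
```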


Flushing metrics manually

If you prefer not to use log_metrics because you might want to encapsulate additional logic when doing so, you can manually flush and clear metrics as follows:

Warning

Metrics, dimensions and namespace validation still applies

Manually flushing and clearing metrics from memory
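The manual flow boils down to three steps: validate and serialize, clear the in-memory store, then print. A minimal sketch using hypothetical module-level stand-ins (metric_set, add_metric, serialize_metric_set) rather than the utility's own methods; the namespace and service values are assumed.

```python
import json

NAMESPACE = "ServerlessAirline"  # assumed; normally taken from POWERTOOLS_METRICS_NAMESPACE
metric_set = {}  # hypothetical in-memory store: name -> {"Unit": ..., "Value": [...]}


def add_metric(name, unit, value):
    metric_set.setdefault(name, {"Unit": unit, "Value": []})["Value"].append(float(value))


def serialize_metric_set():
    """Validate and serialize; validation still applies when flushing manually."""
    if not metric_set:
        raise ValueError("No metrics to serialize")
    doc = {
        "_aws": {
            "CloudWatchMetrics": [{
                "Namespace": NAMESPACE,
                "Dimensions": [["service"]],
                "Metrics": [{"Name": n, "Unit": m["Unit"]} for n, m in metric_set.items()],
            }]
        },
        "service": "booking",
    }
    doc.update({n: m["Value"] for n, m in metric_set.items()})
    return doc


def lambda_handler(event, context):
    add_metric("SuccessfulBooking", "Count", 1)
    blob = serialize_metric_set()  # 1. validate and serialize
    metric_set.clear()             # 2. clear, so the next invocation starts empty
    # ... any additional logic you wanted to run before flushing goes here ...
    print(json.dumps(blob))        # 3. flush to standard output
    return blob


lambda_handler({}, None)
```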

Testing your code

Environment variables

Tip

Ignore this section if:

- You are explicitly setting namespace/default dimension via the namespace and service parameters

- You're not instantiating Metrics in the global namespace

For example, Metrics(namespace="ServerlessAirline", service="booking")

Make sure to set POWERTOOLS_METRICS_NAMESPACE and POWERTOOLS_SERVICE_NAME before running your tests to avoid failing with a SchemaValidationError exception. You can set them before you run tests, or via pytest plugins like dotenv.

Injecting dummy Metric Namespace before running tests
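If you'd rather inject the variables from Python than from the shell, the standard library's unittest.mock.patch.dict can set them for the duration of a block and restore os.environ afterwards. A minimal sketch; current_namespace is a hypothetical stand-in for the namespace check the utility performs, and the dummy values are arbitrary.

```python
import os
from unittest import mock


def current_namespace():
    """Hypothetical stand-in for the namespace check performed at flush time."""
    namespace = os.getenv("POWERTOOLS_METRICS_NAMESPACE")
    if not namespace:
        raise RuntimeError("Metric namespace is not set")
    return namespace


dummy_env = {
    "POWERTOOLS_METRICS_NAMESPACE": "Application",
    "POWERTOOLS_SERVICE_NAME": "Example",
}

# The variables exist only inside the block; os.environ is restored on exit
with mock.patch.dict(os.environ, dummy_env):
    print(current_namespace())  # Application
```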

Clearing metrics

Metrics keeps metrics in memory across multiple instances. If you need to test this behavior, you can use the following pytest fixture to ensure metrics are reset, including the cold start flag:

Clearing metrics between tests

Functional testing

You can read standard output and assert whether metrics have been flushed. Here's an example using pytest with the built-in capsys fixture:


Reading more than one EMF blob from standard output will be needed when using capture_cold_start_metric=True, or when both Metrics and single_metric are used, as each of them emits its own blob.
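When one invocation prints several blobs, you can split the captured output into lines and parse each one, assuming every blob is printed as a single line of JSON. A minimal, pytest-free sketch using contextlib.redirect_stdout; capture_emf_lines and fake_handler are hypothetical, and the blobs are abbreviated (the _aws section is omitted).

```python
import contextlib
import io
import json


def capture_emf_lines(fn, *args):
    """Run fn, capture stdout, and parse every non-empty line as one EMF blob."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        fn(*args)
    return [json.loads(line) for line in buffer.getvalue().splitlines() if line.strip()]


def fake_handler(event, context):
    """Hypothetical handler that prints two blobs, as a cold start invocation might."""
    print(json.dumps({"ColdStart": [1.0], "function_name": "booking-fn", "service": "booking"}))
    print(json.dumps({"SuccessfulBooking": [1.0], "service": "booking"}))


cold_start_blob, app_blob = capture_emf_lines(fake_handler, {}, None)
assert "ColdStart" in cold_start_blob and "SuccessfulBooking" not in cold_start_blob
assert app_blob["SuccessfulBooking"] == [1.0]
```

With pytest, capsys.readouterr().out returns the same captured text, so the line-by-line parsing can be reused unchanged inside a test.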


Tip

For more elaborate assertions and comparisons, check out our functional tests for the Metrics utility.